What’s “readable”? 16 readability metrics and what they miss

Factors affecting readability, detailed data on 16 readability metrics, and why I chose 6 to evaluate. Supplement to "Write on! 7 free tools for more readable writing on a shoestring".

My main post on readability (metrics, tools, and evaluations) is here (coming soon!). Four bonus pages provide details:

Readability features of Microsoft Word and why I’m looking for a new tool.

What “readable” means, 16 metrics, the 6 I chose to evaluate, and why. [YOU ARE HERE]

14 readability analysis tools besides Word, the 7 I chose to evaluate, and why.

Scoring for Word and the 7 selected tools on the 6 selected metrics. (coming soon!)


What’s “Readable”?

In a nutshell, readability is about how easy it is for a reader to understand content.

Whether an article is highly ‘readable’ depends on many factors.

Some factors can be controlled by the writer - such as word choices, structure, and use of graphs or diagrams.

Content formatting and accessibility can also impact whether a reader can ‘read’ and understand the content. Features like audio voiceover, alt text, and support for screen readers can help here.

Other factors are beyond the writer’s control - such as context, prior knowledge, and interest in the topic. For a good explanation of these other factors, see the post “Readability Assessment: Lessons from the Fence” 1.

As a writer, my focus is on the factors writers can control.

Readers’ skills, comprehension, and attention can vary widely. Conventional wisdom on readability 2 says that shorter sentences with simpler words are better for general audiences. Some fiction writers excel by flouting that wisdom; perhaps they’re “the exception that proves the rule”. In general, readable content matches the simplicity of the writing to the expected skills of readers. (Most readability metrics map the output of a formula to a target “grade level” in the US education system.) Part of a writer’s challenge is to know their audience!

Standard advice on writing readable content includes format as well as wording, e.g. the four points in this advice on WordPress 3:

“Use clear and concise sentences: Keep your sentences short and avoid unnecessary jargon. Use simple language that your target audience can easily understand.

Break down your content: Divide your content into paragraphs with proper headings and subheadings. This helps readers navigate your article and find the information they’re looking for.

Utilize bullet points and lists: Bullet points and lists make information more scannable and easier to comprehend. They allow readers to grasp key points and main ideas quickly.

Optimize your vocabulary: Choose words that are appropriate for your target audience. Avoid using overly technical terms or complex vocabulary unless necessary. Remember, clarity is key.”

Established readability metrics only address the first and fourth points. Use of structural text elements and formatting - the second and third points - should be measurable and interpretable. But no one seems to have tackled these aspects yet in a readability metric.

Another aspect of helping readers understand content is use of “visual aids” (pictures, diagrams, or tables) 4. I didn’t find any established metrics that account for how non-text or structural elements affect reading ease, even for online articles 5 6. One article advocates for short paragraphs 7, but none of the 16 readability metrics I surveyed use paragraph counts. Other guides for technical writing 8 focus on style and avoiding passive voice. (A few tools, including Word, calculate the percentage of passive voice sentences.)

Since “a picture is worth a thousand words”, ignoring use of images seems like a gap. It’s not an easy gap to address! Today’s and tomorrow’s AI might be useful for inferring what’s in a picture. But we’re a long way from AI accurately identifying whether a picture, graph, diagram, or table is “good”, i.e. what it contributes to a reader’s understanding.

TL;DR: Things that we count and calculate today - readability scores and grade levels - cover only a subset of what matters. However, they might still help us with improving readability of our content.

“All models are wrong, but some are useful” (George Box, 1978-79).

Data Used for Estimating Readability

Wikipedia 9 gave me a starter list of available readability metrics. Web searches for articles recommending how to choose a metric only yielded a few articles 10 11. Searching for readability analysis tools with good documentation on their metrics was more fruitful.

Counts used in text-based readability analysis tools can include characters, words, sentences, and syllables. Each of these counts has variants, e.g.:

Characters - including spaces, no spaces, only letters and digits (no symbols), letters only.

Words - Easy or hard/complex, abbreviated, hyphenated, proper nouns, multi-syllable verbs.

Sentences - short, medium, long, compound; passive voice or active voice.
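To make a few of these count variants concrete, here’s a minimal Python sketch. It is an illustration only and isn’t taken from any particular tool. The function name `basic_counts` is hypothetical, and so are its rules. A word is a run of letters or digits, optionally joined by internal apostrophes or hyphens. A sentence is any non-empty text between runs of ., !, or ?. Real tools handle abbreviations, decimals, and bullets in different ways, which is one reason their counts disagree.

```python
import re

def basic_counts(text: str) -> dict:
    """Count character variants, words, and sentences using simplified rules."""
    # A word: letters/digits, optionally joined by internal ' or - ("don't", "well-known").
    words = re.findall(r"[A-Za-z0-9]+(?:['-][A-Za-z0-9]+)*", text)
    # A sentence: non-empty text between runs of ., !, or ?.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return {
        "chars_with_spaces": len(text),
        "chars_no_spaces": len(re.sub(r"\s", "", text)),
        "letters_and_digits": sum(ch.isalnum() for ch in text),
        "letters_only": sum(ch.isalpha() for ch in text),
        "words": len(words),
        "sentences": len(sentences),
    }

print(basic_counts("Keep it short. Use simple words!"))
# {'chars_with_spaces': 32, 'chars_no_spaces': 27, 'letters_and_digits': 25, 'letters_only': 25, 'words': 6, 'sentences': 2}
```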

Some metrics don’t use syllables. Others rely on them, and tools often report how many words have 1, 2, 3, 4, 5, 6, or 7+ syllables. Gunning Fog and SMOG use “polysyllabic” word counts, i.e. words with 3 or more syllables.
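A syllable histogram and polysyllabic count can be sketched as follows in Python. The syllable rule is an assumption: a crude heuristic that counts vowel groups and drops a silent final “e”. It is not what any specific tool does, and it will miscount some words (for example, it gives “created” 2 syllables). The names `count_syllables` and `syllable_profile` are hypothetical.

```python
import re
from collections import Counter

def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, minus a silent final 'e'.

    Real tools use dictionaries and more rules, so counts will differ.
    """
    w = word.lower()
    n = len(re.findall(r"[aeiouy]+", w))
    # "make" -> 1, but keep "-le" endings such as "table" at 2.
    if w.endswith("e") and not w.endswith("le") and n > 1:
        n -= 1
    return max(n, 1)

def syllable_profile(text: str) -> dict:
    """Histogram of words by syllable count (1..6, 7+) plus the polysyllabic total."""
    words = re.findall(r"[A-Za-z]+(?:['-][A-Za-z]+)*", text)
    counts = Counter(min(count_syllables(w), 7) for w in words)
    histogram = {("7+" if k == 7 else str(k)): counts[k] for k in range(1, 8)}
    polysyllabic = sum(n for k, n in counts.items() if k >= 3)
    return {"histogram": histogram, "polysyllabic": polysyllabic}

print(syllable_profile("Readability metrics are simple"))
# {'histogram': {'1': 1, '2': 2, '3': 0, '4': 0, '5': 1, '6': 0, '7+': 0}, 'polysyllabic': 1}
```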

Some tools also count lines, pages, and paragraphs, and provide counts like the number of words in the shortest and longest paragraphs. These values generally aren’t used in readability scoring, though. The one exception is lines. Some tools detect when a high percentage of lines don’t end with punctuation (e.g. section titles or bullets). They can use the line count instead of the sentence count to get a more accurate metric.
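The line-count fallback could look roughly like the minimal Python sketch below. The function name and the 50% threshold are hypothetical choices, not documented values from any tool. The sketch also assumes that only ., !, and ? count as end punctuation.

```python
import re

END_PUNCTUATION = (".", "!", "?")

def sentence_or_line_count(text: str, threshold: float = 0.5) -> int:
    """Use the line count instead of the sentence count when more than
    `threshold` of the non-blank lines lack end punctuation (titles, bullets).
    The default threshold is a hypothetical choice."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        return 0
    unpunctuated = sum(not ln.endswith(END_PUNCTUATION) for ln in lines)
    if unpunctuated / len(lines) > threshold:
        return len(lines)
    # Otherwise count sentences: non-empty text between runs of ., !, or ?.
    return len([s for s in re.split(r"[.!?]+", text) if s.strip()])

doc = "Data Used\nCharacters\nWords\nSentences are counted too."
print(sentence_or_line_count(doc))  # 4
```

Without the fallback, the three unpunctuated lines merge into a single “sentence”. This snippet would then average 8 words per sentence instead of 2.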

Definitions of “complex words” or “hard words” vary across tools and metrics. Some metrics rely on syllable counts alone. Others make exceptions, e.g. not counting hyphenated (compound) words or 2-syllable verbs that reach 3 syllables with -es or -ed endings. Still others use word lists, such as this list of 10,000 common words 12.
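The choice of definition matters, as this minimal Python sketch of three “complex word” rules shows:

- a plain 3+ syllable cutoff;
- a cutoff with the exceptions from the Gunning Fog definition;
- a word-list check.

Several pieces are assumptions. The syllable counter is a crude vowel-group heuristic. Proper nouns are approximated as capitalized words. The tiny `COMMON` set is a hypothetical stand-in for a real list such as the 10,000 common words. All function names are hypothetical too.

```python
import re

def count_syllables(word: str) -> int:
    # Rough vowel-group heuristic (an assumption, not any tool's method).
    w = word.lower()
    n = len(re.findall(r"[aeiouy]+", w))
    if w.endswith("e") and not w.endswith("le") and n > 1:
        n -= 1  # silent final "e", as in "make"
    return max(n, 1)

def is_complex_by_syllables(word: str) -> bool:
    """Plain rule: 3 or more syllables."""
    return count_syllables(word) >= 3

def is_complex_fog_style(word: str) -> bool:
    """3+ syllables, minus Gunning Fog-style exceptions."""
    # Proper nouns, approximated as capitalized words (this also skips
    # sentence-initial words, a weakness of the approximation).
    if word[:1].isupper():
        return False
    # Hyphenated compounds (treated here as never complex, a simplification).
    if "-" in word:
        return False
    # Words that reach 3 syllables only via an -es or -ed ending.
    lower = word.lower()
    if lower.endswith(("es", "ed")) and count_syllables(lower[:-2]) < 3:
        return False
    return count_syllables(lower) >= 3

def is_complex_by_word_list(word: str, common_words: set) -> bool:
    """Word-list rule: anything not on the familiar-word list counts as hard."""
    return word.lower() not in common_words

# Hypothetical stand-in for a real common-word list.
COMMON = {"the", "and", "use", "simple", "words"}
for w in ["repeated", "Wikipedia", "well-organized", "readability", "metrics"]:
    print(w, is_complex_by_syllables(w), is_complex_fog_style(w),
          is_complex_by_word_list(w, COMMON))
```

On these five words, the three rules agree only on “readability”.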

6 Readability Metrics Selected (and Why)

Flesch Reading Ease (FRE) and Flesch-Kincaid Grade Level (FKGL) - Pair of metrics that use the same inputs (words, sentences, and syllables) with different formulas. Why? Classic baseline.

Automated Readability Index (‘ARI’) 13 - Counts sentences, words, and characters, but not syllables. Why? Simple to calculate; alternative view of complexity, considered better than Flesch for technical writing.

FORCAST Grade Level (FOR) 14 - Does not count sentences, and evaluates frequency of 1-syllable words. Why? Useful for technical training material.

Gunning Fog Index (‘Fog’) - Considers complex words which are “3 or more syllables” 15 or “contain three or more syllables that are not proper nouns, combinations of easy or hyphenated words, or two-syllable verbs made into three by adding -es and -ed endings” 16. Why? Blends average sentence length in words with a more complex focus on word choices, not just characters per word.

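Linsear Write (LW) 17 - In a 100-word sample, counts simple words (1-2 syllables) once and “complex words” (3+ syllables) three times, then divides the sum by the number of sentences. The result is converted to a grade level by halving it (after first subtracting 2 if it’s 20 or less). Why? Simpler calculation than Fog, similar to SMOG.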

SMOG Index (‘SMOG’) 20 - Evaluates use of words with 3+ syllables. (SMOG = “Simple Measure Of Gobbledygook”). Why? Similar to LW.

In theory, SMOG should not work on most of my posts. The conventional SMOG method requires at least 30 sentences. To date, only 29 of my 69 total posts meet that bar. However, all but one of the evaluated readability tools supporting SMOG gave a value for posts well under 30 sentences. 🤷♀️

For all of these metrics except the Flesch Reading Ease Score, the formula result is converted to a grade level in the US education system.
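Once the counts exist, the formulas themselves are short. Here’s a Python sketch of the six selected metrics using their commonly published coefficients. Each function takes counts as inputs, so any differences in how a tool counts words, syllables, or complex words flow straight into the scores. A few details are assumptions:

- “characters” for ARI means letters and digits only.
- FORCAST counts from a sample other than 150 words are scaled to 150, which some tools may not do.
- The Linsear counts are assumed to come from a 100-word sample.

The passage counts in the usage example are made up for illustration.

```python
import math

def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    # Higher = easier (roughly 0-100); not a grade level.
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)

def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59

def automated_readability_index(characters: int, words: int, sentences: int) -> float:
    # `characters` = letters and digits only (no spaces or punctuation).
    return 4.71 * (characters / words) + 0.5 * (words / sentences) - 21.43

def forcast_grade(monosyllables: int, words: int) -> float:
    # Defined on a 150-word sample; scaling other sample sizes is an assumption.
    per_150 = monosyllables * 150 / words
    return 20 - per_150 / 10

def gunning_fog(words: int, sentences: int, complex_words: int) -> float:
    return 0.4 * ((words / sentences) + 100 * (complex_words / words))

def linsear_write(easy_words: int, hard_words: int, sentences: int) -> float:
    # Intended for a 100-word sample: easy (1-2 syllables) x1, hard (3+) x3.
    r = (easy_words + 3 * hard_words) / sentences
    return r / 2 if r > 20 else (r - 2) / 2

def smog_grade(polysyllables: int, sentences: int) -> float:
    # Scales the polysyllable count to 30 sentences, the sample SMOG expects.
    return 1.0430 * math.sqrt(polysyllables * 30 / sentences) + 3.1291

# Hypothetical 100-word, 5-sentence passage: 150 syllables, 470 letters/digits,
# 70 one-syllable words, 20 two-syllable words, 10 words with 3+ syllables.
scores = {
    "FRE": flesch_reading_ease(100, 5, 150),
    "FKGL": flesch_kincaid_grade(100, 5, 150),
    "ARI": automated_readability_index(470, 100, 5),
    "FOR": forcast_grade(70, 100),
    "Fog": gunning_fog(100, 5, 10),
    "LW": linsear_write(90, 10, 5),
    "SMOG": smog_grade(10, 5),
}
for name, value in scores.items():
    print(f"{name}: {value:.1f}")
# FRE: 59.6, FKGL: 9.9, ARI: 10.7, FOR: 9.5, Fog: 12.0, LW: 12.0, SMOG: 11.2
```

Even with identical counts, the grade-level metrics in this sketch range from 9.5 to 12.0.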

10 Readability Metrics Not Selected (and Why Not)

CEFR Test 21 - Rates readability against international proficiency levels (e.g. “CEFR B2”). This would be great to try, since my audience is global. Why not? The only available tool (Readable) isn’t free - not in my budget.

Coleman-Liau Index 22 - Relies on characters, words, and sentences. Why not? Commonly used in healthcare and law - not my domain - and doesn’t seem to add value over other selected metrics.

(New) Dale-Chall Readability Formula 23 - Uses a 3000-word list of “familiar” words 24. Why not? Designed for readers with elementary-level reading skills.

Fry Graph Readability 25 - Why not? Mostly used in education (elementary, middle, and high school in the US).

IELTS Test 26 - Rates readability against international tests. Like CEFR, this could be great for my global audience. Why not? The only available tool (Readable) doesn’t fit in my budget.

Lensear Write 27 28 - NOT the same as Linsear! - Developed for USAF. Credits monosyllabic words, strong verbs, and more sentences. Higher numbers reflect simpler writing; target is 70-80. Why not? Not available in any free tools.

Lix-Rix 29 - Good for non-English texts. Why not? I’m writing only in English.

Powers Sumner Kearl 30 - Why not? For children ages 7-10.

Raygor Graph 31 - Why not? Best used for middle school education.

Spache 32 - Why not? For children up to 4th grade.

I found a few other measures in articles, but with no details about formulas or tools which support them (e.g., “McLaughlin SMOG Formula”).

Some articles treat estimated reading time as a readability metric. Reading time estimates are useful, but not for improving quality of my writing. A high reading time might simply mean a long but highly readable article. Without knowing the formula, a reading time estimate wouldn’t be actionable anyway.

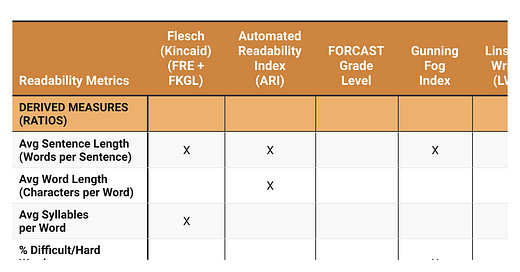

Summary of Selected Metrics and Required Data

TL;DR notes:

None of the selected metrics use paragraph-related data.

Only ARI uses character counts.

All but FORCAST use sentence counts.

Linsear counts complex words (3+ syllables) and simple words (1-2 syllables).

Gunning Fog and SMOG indices count polysyllabic words (3+ syllables).

FORCAST counts only 1-syllable words.

Final Observations

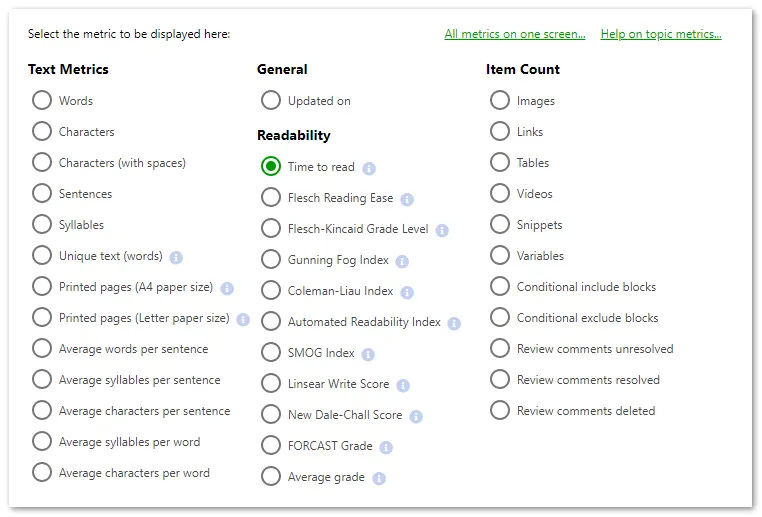

I did find a reference 33 to one platform for document authoring and workflow, ClickHelp, that appears to count use of images, tables, videos, and other elements, in addition to supporting multiple metrics for readability:

I’m not a ClickHelp user; the platform is overkill for my writing needs, and way out of my budget. So I don’t know how the tool is using these counts of visual aids and links. It would be interesting to see research into counting images, diagrams, and tables in posts, and correlating those counts with reader perceptions of readability. Perhaps some enterprising researcher will tackle studying this?

In the meantime, I’m moving forward with the metrics I’ve selected above for my writing.

References

“What is readability?”, Readable.com.

“What Is a Readability Score?” (themeisle.com)

“What are the readability metrics?”, by Nahid Komol

“From Paper to Pixels: Adapting Readability for Today’s Tech-Savvy Readers”, by Janice Jacobs, 2023-12-27

“Readability vs. SEO: Striking the Perfect Balance for Online Content”, by Brian Scott, 2023-12-27

“What Is a Good Readability Score and How to Improve It?” (phrasly.ai)

“Tips on Improving Technical Writing”, by Kesi Parker | Technical Writing is Easy, on Medium

Category Readability_tests, Wikipedia (it does not have all of the latest metrics, e.g. FORCAST, but is still useful as a first intro).

“What are the most effective readability metrics for different audiences?” - an “AI-powered article” on LinkedIn. It briefly overviews 6 metrics (Flesch Reading Ease, Flesch-Kincaid Grade Level, Gunning Fog Index, SMOG Index, Automated Readability Index, Coleman-Liau Index) but gives no real advice on choosing one.

“Best Readability Score To Rank in Google?” (Originality.AI) recommends choosing FORCAST, Gunning Fog, Flesch Reading Ease, and Dale Chall for targeting higher Google search ranks. Their reason: they assert that SMOG, Coleman-Liau, Flesch-Kincaid Grade Level, and Automated Readability “lump college-level and higher scores into grade 12, which creates skewed data”. Since the usual advice is to aim for grade 8 or 9, not 12, I don’t see that this should stop one from using those metrics.

10,000 common words, referenced by “The Up-Goer Five Text Editor”

“Automated Readability Index” on Readable

“Gunning Fog Index”, Readable.com

“What Is the Gunning Fog Index?”, Free Readability Test Tool (webfx.com)

“What is the Linsear Write Formula and How Does It Measure Readability?” on Originality.ai

“LINSEAR Write Readability” (CheckReadability.com)

“Linsear Write formula” - Teflpedia

“SMOG Index” on Readable

“Coleman-Liau Index” on Readable

“New Dale-Chall Readability Formula” on Readable

“The Dale-Chall 3,000 Word List for Readability Formulas” – ReadabilityFormulas.com

“The Fry Readability Graph” on Readable

“IELTS Test” on Readable

“The Lensear Write Readability Formula” on Readable

“Lensear Write Readability Formula” on Originality.AI

“The Lix-Rix Readability Formulas” on Readable

“The Powers Sumner Kearl Readability Formula” on Readable

“The Raygor Readability Graph” on Readable

“The Spache Readability Formula” on Readable

“Readability Metrics and Technical Writing”, by Kesi Parker | Technical Writing is Easy, on Medium

Tip on making writing more readable by varying sentence lengths to "write music": https://substack.com/@susancain/note/c-58434850?r=3ht54r
