Write On! Choosing From 7 Free Tools For More Readable Writing On A Shoestring 🗣️

Exploring how to measure readability accurately enough to improve my writing on a shoestring budget: which tool I chose and why. (Audio; 21:22)

Most of us have to write for our jobs and daily lives. We write emails, letters, resumes, and so much more. Some of us even want to write (hello, Substackers 👋). I’m working this year on becoming a better writer. If you’re curious about better writing, too, read on!

During June, I’ve been on a side quest from my AI-focused writing, looking into tools for improving readability. This post summarizes what I’ve learned about readability, metrics, and available free tools.

TL;DR I’m now using one new tool and my data to help me improve the readability of my writing on an “ethical shoestring” budget. I might use two others in my monthly retrospectives if I can resolve some concerns about their AI ethics and privacy.

This is my main post on readability metrics, tools, and evaluations. Five bonus pages provide details.

Readability features of Microsoft Word and why I’m looking for a new tool.

What “readable” means, 16 metrics, the 6 I chose to evaluate, and why.

14 readability analysis tools besides Word, the 7 I chose to evaluate, and why.

Data on writing about data: Results from trying 7 online readability tools.

“Scuba dive” page on how the tools worked on the 5 evaluated cases.

Contents of this post:

Overview - what problem am I trying to solve, and what did I already try?

Approach - how am I going to solve it?

Data Analysis - what I did and what I learned.

Conclusions - what I decided and why.

What’s Next? - actions I’m taking as a result of the evaluation.

Appendix - big table summarizing tool strengths and weaknesses.

References

1. Overview

Improving my writing includes what I write about (content) and how I write (style). This post is about part of the how.

My Goal: Simpler, Clearer Writing.

I tend to write in long sentences. That style is generally accepted in research papers and technical reports, which I’ve written for 20+ years. However, the conventional wisdom on readability says shorter sentences with simpler words are better for general audiences. For the technical non-fiction I mostly write, the advice seems apt. Even Einstein strove to simplify his scientific writing; why shouldn’t I?

“Everything should be as simple as possible, but no simpler.” - Albert Einstein

So I’m looking for ways to understand:

how readable my writing is, and

whether I’m improving over time.

In “user story” format:

As a writer,

I want to continually improve the readability of my writing,

so that more people can understand and enjoy reading my articles.

Since I believe in using data, this goal means measuring and monitoring the readability of my posts. This side quest to find a tool is an action from my retrospectives on writing in April and May.

Caveat: metrics are inherently incomplete. True reading ease and comprehension involve many facets that readability metrics don’t count or consider. See “What’s ‘readable’? 16 readability metrics and what they miss” for more on this.

“Not everything that counts can be counted, and not everything that can be counted counts.” (William Bruce Cameron, “Informal Sociology: A Casual Introduction to Sociological Thinking”, 1963)

What I’ve Tried So Far

Initially, I used Microsoft Word’s “Readability Statistics” feature. After applying it to 3 months of writing (66 posts), I’ve found the tool flaky and awkward to use. (See details on this page about using MS Word.) I want a better tool.

Problem to be Solved

Here’s the specific problem I want to solve now to meet this need.

What tool(s) can I use to effectively measure and monitor the readability of my writing, with minimal effort and (preferably zero) cost?

Word supports only the two Flesch readability metrics. The pair is a classic and broadly applicable. However, other metrics may be more suitable for technical writing. Since I’m going to choose a new tool, I want to explore other metrics.

Constraints: affordability (free), interoperability (tool must be usable from a Windows laptop; browser is ok)

Non-Functional Requirements (NFRs): Data completeness, transparency, privacy, AI ethics, accuracy, ease of use, dynamic capacity, and performance (response time).

Functional Requirements (FRs): My requirements are simple: support for Flesch metrics plus other metrics that may suit technical writing better.

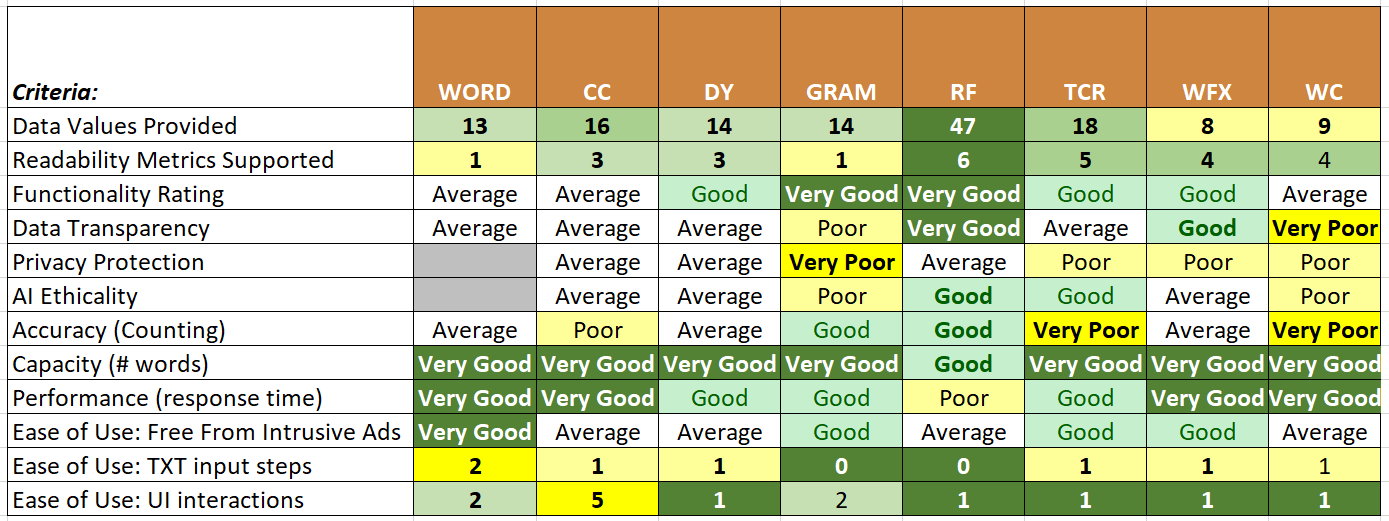

I’ll be filling in a table like this:

The writing and education industries already have many established standards for readability. To solve my problem, I’ll rely on their metrics and formulas for row 2. Solving my problem involved four steps (with some iterations).

Step 1. Select Readability Metrics

Purpose: Research available metrics for readability and select a relevant subset.

Outcome: Most readability tools report their score as a grade level in the US education system. For general audiences, the standard advice is to aim for 8th grade. For technical audiences, grade 9 or a little higher may be OK.

The bonus post on readability metrics summarizes 3 topics covered in this step.

What readability means, what factors affect ease of reading, and what metrics do & don’t cover.

Details on the 6 metrics I selected and the data inputs their formulas need.

Details on the 10 metrics I didn’t select.

The six I selected are:

Flesch-Kincaid (‘FKGL’ and ‘FRE’) - Classic pair of metrics that use the same inputs (words, sentences, and syllables) with different formulas and meanings.

Flesch Reading Ease Score: 0-100, higher is better; aim for 60-70.

Flesch-Kincaid Grade Level: Lower means easier to read; aim for 8-9.

Automated Readability Index (‘ARI’) - Counts sentences and characters, but not syllables, and generates a grade level. Considered better for technical writing.

FORCAST Grade Level (‘FOR’) - Evaluates frequency of 1-syllable words and assigns a grade level. Designed for technical documents; does not count sentences (ending punctuation doesn’t matter).

Gunning Fog Index (‘FOG’) - Evaluates use of complex words and converts the score to a grade level.

Linsear Write (‘LW’) - Counts and weights simple and complex words per sentence, then translates this score to a grade level.

SMOG Grade Level (‘SMOG’) - Evaluates use of words with 3+ syllables and scores the required education level to understand the text. (SMOG = “Simple Measure Of Gobbledygook”[1].)
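To make the differences between these six metrics concrete, here’s a minimal Python sketch of the published formulas. It assumes the counts (words, sentences, syllables, characters, complex words) have already been produced; the function names are hypothetical, and real tools may apply extra rules (for example, Fog usually excludes proper nouns and some suffixed words from “complex” words, and FORCAST is defined on a 150-word sample).

```python
import math


def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """FRE: roughly 0-100, higher means easier to read."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """FKGL: approximate US school grade level, lower means easier."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def automated_readability_index(characters: int, words: int, sentences: int) -> float:
    """ARI: uses characters per word instead of syllables per word."""
    return 4.71 * (characters / words) + 0.5 * (words / sentences) - 21.43


def forcast_grade(one_syllable_words_in_150: float) -> float:
    """FORCAST: counts only 1-syllable words in a 150-word sample; ignores sentences."""
    return 20 - one_syllable_words_in_150 / 10


def gunning_fog(words: int, sentences: int, complex_words: int) -> float:
    """Fog: 'complex' words are (roughly) words with 3 or more syllables."""
    return 0.4 * ((words / sentences) + 100 * (complex_words / words))


def linsear_write(easy_words: int, hard_words: int, sentences: int) -> float:
    """Linsear Write on a ~100-word sample.

    Easy words (1-2 syllables) score 1 point, hard words (3+ syllables) score 3.
    """
    raw = (easy_words * 1 + hard_words * 3) / sentences
    return raw / 2 if raw > 20 else (raw - 2) / 2


def smog_grade(polysyllables: int, sentences: int) -> float:
    """SMOG: polysyllables are words with 3 or more syllables."""
    return 1.0430 * math.sqrt(polysyllables * (30 / sentences)) + 3.1291
```

With hypothetical counts of 100 words, 5 sentences, and 140 syllables, `flesch_reading_ease(100, 5, 140)` comes out to about 68.1 and `flesch_kincaid_grade(100, 5, 140)` to about 8.7. Every formula is simple arithmetic on counts, which suggests that disagreements between tools are more likely to come from how they count than from the formulas themselves.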

Step 2. Select readability analysis tools for evaluation

Purpose: Research available tools that provide these 6 metrics for free, and select a subset of tools to evaluate and compare (to Word and to each other).

Outcome: I surveyed 14 tools and selected 7 to evaluate:

Character Calculator (CC)

Datayze (DY)

Grammarly (free plan) (GRAM)

Readability Formulas (RF)

Text Compare Readability (TCR)

WebFX Read-able (WFX)

WordCalc (WC)

Support for the 6 metrics I selected varies across the 7 tools:

All 7 tools support FRE and FKGL. Sometimes the FKGL value is not precise, though. The report might just say “at least 9th grade” or “grade 8-9”.

6 tools (all except GRAM) support SMOG and Fog.

4 of the 7 tools support the ARI metric.

Only RF and TCR support the LW metric.

RF supports all 6 metrics; it is the only free tool that supports FOR.

The bonus page on readability analysis tools gives a detailed mapping of tools to the readability metrics they support. The page also explains why these 7 tools were selected and 7 others were not.

Step 3. Evaluate Selected Tools on Example Data

Purpose: Run the 7 selected tools for the 6 selected metrics on varying post sizes.

I initially assumed that pretty much any of the tools would function well enough with the metrics they implemented. What I wanted to test were the NFRs (ease of use, security, privacy, capacity, affordability, performance, etc.) to see which tools fit my writing style and principles.

That optimistic assumption blew up almost immediately 😆. Have you ever heard the saying “Mann Tracht, Un Gott Lacht” (“Man Plans, and God Laughs”)? Yeah.

3.1 Selecting Evaluation Cases

I chose 5 posts across LinkedIn and Substack from the past 3 months. All were previously saved in a Word DOCX file as rich text.

Case 1 - LinkedIn post about Datawrapper article (under 100 words).

Case 2 - Substack AAaB post “Measuring writing effort” (~400 words).

Case 3 - LinkedIn post about my Substack post on ageism (~400 words).

Case 4 - Substack post “More Than Melodies” (~2800 words).

Case 5 - AAaB post on ageism “The older kids are not alright” (~4600 words).

I verified that all of the tools except RF could handle the biggest post. I then truncated the Case 5 file to fit within RF’s 3500-word limit and used that truncated file with every tool, so my analysis of Case 5 was “apples to apples” across all 7 tools.

3.2 Data Cleaning and Preparation

TL;DR To calculate the readability statistics and counts in Word and the other 7 tools, I used plain text files with emojis, no hashtags, and no endnotes or LinkedIn URLs.
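For anyone who wants to automate part of this cleanup, here’s a minimal sketch of stripping URLs and hashtags from a post while keeping emojis. The regular expressions and the helper name `prepare_plain_text` are assumptions for illustration, not the process used above; endnote removal is not handled, and the URL pattern will also swallow any punctuation stuck to the end of a link.

```python
import re

# Hypothetical cleanup rules: any http(s) link, and any '#word' not glued to a preceding letter.
URL_PATTERN = re.compile(r"https?://\S+")
HASHTAG_PATTERN = re.compile(r"(?<!\w)#\w+")


def prepare_plain_text(text: str) -> str:
    """Remove URLs and hashtags, keep emojis, and tidy leftover spaces line by line."""
    text = URL_PATTERN.sub("", text)
    text = HASHTAG_PATTERN.sub("", text)
    # Collapse runs of spaces/tabs left behind by the removals, and trim each line.
    lines = [re.sub(r"[ \t]+", " ", line).strip() for line in text.splitlines()]
    return "\n".join(lines)
```

For example, `prepare_plain_text("Great read 🙂 https://example.com/post #writing #data")` returns `"Great read 🙂"`. The negative lookbehind keeps text such as “C#” intact, since that `#` follows a letter.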

The bonus page “Data on Writing about Data: Results from trying 7 online readability tools” explains some early observations about data quality and why I chose these settings.

3.3 What the Data Says

Given that my one big assumption went out the window so fast, here are the main questions I wanted the data to help me answer.

Q1. Which METRICS are calculated most consistently, and least consistently, by all tools that support them?

Q2. Which TOOLS produce

- the most accurate COUNTS, and

- GRADE LEVEL results that are most consistent with the consensus grade level across all tools?

See “Data on Writing about Data: Results from trying 7 online readability tools” for details on my evaluations of the 7 tools. The tables in that post summarize the average readability ratings and normalized standard deviations for the 7 tools across the 5 evaluated cases.
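One common way to “normalize” a standard deviation is to divide it by the mean (the coefficient of variation), so that spreads on FRE (0-100) and on grade levels (roughly 5-15) can be compared. Here’s a minimal sketch under that assumption; the function names and tool scores are hypothetical, and the sketch uses the population standard deviation.

```python
import statistics


def normalized_std_dev(scores_by_tool: dict[str, float]) -> float:
    """Coefficient of variation across tools for one metric on one case.

    Assumption: 'normalized' means standard deviation divided by the mean.
    Uses the population standard deviation (pstdev).
    """
    values = list(scores_by_tool.values())
    return statistics.pstdev(values) / statistics.mean(values)


def average_over_cases(per_case: list[dict[str, float]]) -> float:
    """Average the per-case coefficients of variation (e.g., over 5 evaluated cases)."""
    return statistics.mean([normalized_std_dev(case) for case in per_case])
```

For instance, two hypothetical tools scoring FKGL 8.0 and 12.0 on one case give a normalized standard deviation of 0.2 (a spread of 20% of the mean).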

The short summary fits one of my favorite quotes:

"In theory, there is no difference between theory and practice.

In practice, there is.” (Jan L. A. van de Snepscheut)

This dot plot shows how much the grade-level metrics varied from one tool to another. A table view follows, which also shows the FRE scores.

FRE showed the least variation. All tools except WordCalc were within ±8% of the median FRE.

For grade levels, CC, WC, and TCR all deviated strongly from the median, to varying degrees, on most or all metrics.
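A check like “within ±8% of the median” can be sketched as below. The tool names and scores are hypothetical; the signed percentages also show the direction of any bias, since a tool that lands above (or below) the median on metric after metric is consistently skewed rather than just noisy.

```python
import statistics


def percent_from_median(scores: dict[str, float]) -> dict[str, float]:
    """Each tool's signed deviation from the median score, as a percentage of that median."""
    median = statistics.median(scores.values())
    return {tool: 100 * (value - median) / median for tool, value in scores.items()}


def outside_tolerance(scores: dict[str, float], tolerance_pct: float = 8.0) -> list[str]:
    """Tools whose score falls outside +/- tolerance_pct of the median."""
    deviations = percent_from_median(scores)
    return [tool for tool, pct in deviations.items() if abs(pct) > tolerance_pct]
```

With hypothetical FRE scores `{"A": 60.0, "B": 62.0, "C": 64.0, "D": 75.0}`, the median is 63, and only tool D (about 19% above the median) falls outside ±8%.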

Step 4. Analyze the Findings

This lack of consistency in the counts and grade levels is puzzling. The formulas for the readability metrics are well-established standards. With “character count”, there may be room for confusion on whether the count is supposed to include spaces or digits. Counting syllables can be tricky in English, but not all metrics use syllable counts.
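To illustrate how much room there is for disagreement, here’s a small sketch of three plausible definitions of “character count” and a naive vowel-group syllable counter. The definitions and the heuristic are hypothetical, not taken from any of the evaluated tools; they simply show how easily two reasonable implementations can diverge.

```python
import re


def character_counts(text: str) -> dict[str, int]:
    """Three plausible definitions of 'character count' a tool might use."""
    return {
        "letters_only": sum(ch.isalpha() for ch in text),
        "letters_and_digits": sum(ch.isalnum() for ch in text),
        "all_including_spaces": len(text),
    }


def ari(characters: int, words: int, sentences: int) -> float:
    """Automated Readability Index; more characters per word means a higher grade."""
    return 4.71 * (characters / words) + 0.5 * (words / sentences) - 21.43


def naive_syllables(word: str) -> int:
    """Count vowel groups, minus one for a final 'e'. Simple, and often wrong."""
    count = len(re.findall(r"[aeiouy]+", word.lower()))
    if word.lower().endswith("e") and count > 1:
        count -= 1  # treat a final 'e' as silent, as in "code"
    return max(count, 1)
```

For the sentence “In 2024, I wrote 66 posts.”, the three definitions give 13, 19, and 26 characters, and ARI rises with each one. The syllable heuristic gets “code” right (1) but undercounts “readable” (2 instead of 3) and “simple” (1 instead of 2).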

Variability in counting the number of sentences seems like the biggest factor. This may be inherent in the type of texts I put in my articles. I use section titles, bulleted and numbered lists, TOCs, and other non-sentences. (And I don’t intend to change that part of my writing style just to make a tool happy.) GRAM and RF seem to handle this well. Other tools, not so much.
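Here’s a sketch of why non-sentences matter so much. One counter looks only at ending punctuation; the other also treats each unpunctuated line (heading, bullet, TOC entry) as a sentence, which is similar in spirit to the “unpunctuated lines” option described for RF below. Both functions are hypothetical simplifications, not any tool’s actual implementation; for example, abbreviations such as “e.g.” would be miscounted.

```python
import re

# A sentence "ends" at ., ! or ? followed by whitespace or the end of the text.
SENTENCE_END = re.compile(r"[.!?]+(?=\s|$)")


def count_sentences_punctuation_only(text: str) -> int:
    """Count ending punctuation only; headings and list items merge into neighbors."""
    return len(SENTENCE_END.findall(text))


def count_sentences_with_lines(text: str) -> int:
    """Also count each non-empty line that lacks ending punctuation as one 'sentence'."""
    count = 0
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        count += len(SENTENCE_END.findall(line))
        if not line.endswith((".", "!", "?")):
            count += 1  # heading, bullet, TOC entry, etc.
    return count
```

On a four-line sample made of a section title, one line with two punctuated sentences, and two unpunctuated list items, the first function counts 2 sentences and the second counts 5. Dividing the same words by 2 instead of 5 sentences makes the average sentence length 2.5 times higher, which feeds directly into FRE, FKGL, ARI, Fog, and SMOG.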

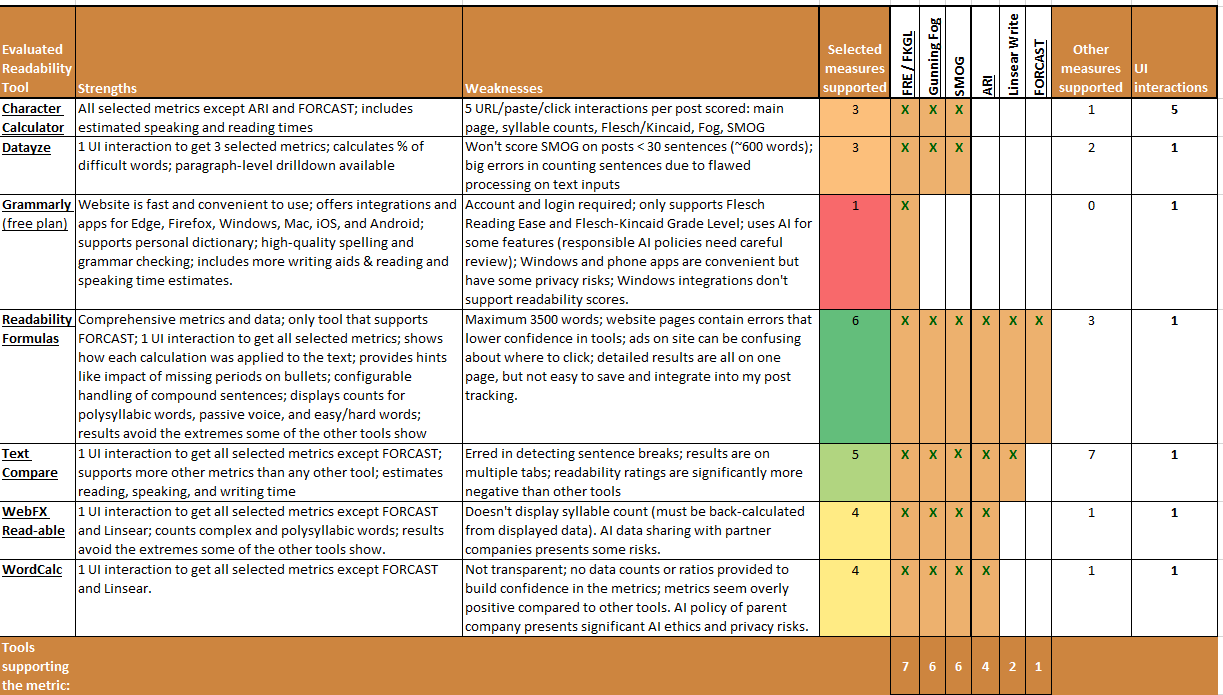

The Datawrapper table in the Appendix summarizes the strengths and weaknesses of the 7 evaluated free tools.

In addition to looking at the data for the counts and metrics, I compared each tool to my evaluation criteria, including the NFRs. Here’s the completed table.

4. Conclusions

Seeing so many differences across the counts and readability values calculated by Word and the 7 evaluated tools was unexpected. Fortunately, my new readability measurement toolset doesn’t have to be perfect. It just has to be good enough to indicate if I’m improving or backsliding.
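“Good enough to indicate if I’m improving or backsliding” can be made concrete with a simple trend line over posts. Here’s a minimal sketch, assuming one grade-level score per post in publication order; the function name is hypothetical. For grade levels, a negative slope means posts are getting easier to read.

```python
def trend_slope(scores: list[float]) -> float:
    """Ordinary least-squares slope of score vs. post index (0, 1, 2, ...)."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores to fit a trend")
    mean_x = (n - 1) / 2  # mean of 0..n-1
    mean_y = sum(scores) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(scores))
    variance = sum((x - mean_x) ** 2 for x in range(n))
    return covariance / variance
```

For example, grade levels of 10, 9, and 8 on three consecutive posts give a slope of -1.0 (one grade easier per post). On Python 3.10+, `statistics.linear_regression` computes the same slope.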

Based on the data and functional findings, I discarded the extremes: TCR, WC, and CC.

First choice: Readability Formulas.

If I routinely wrote posts longer than 3500 words, I’d probably have to choose WFX or DY. Since I don’t, RF seems most likely to work well for my needs and type of writing. Its ethical posture seems solid, and its pluses are:

Accuracy: RF automatically tries to recognize “unpunctuated lines” and can use line counts instead of (punctuated) sentence counts in the metrics. That shows an understanding of what’s going on in these formulas when applied to real-world texts. It also fits better with the style and formatting of what I write. Other tools might do this, but if so it’s under the hood - they never say so.

Configurability: RF is highly configurable, e.g. I can customize how compound words and sentences are handled.

Traceability: RF transparently shows each of the calculations and shows all of the count and ratio data that went into each formula. I appreciate that. (It doesn’t always show how a calculated score converts to a grade level, though.) WFX does this too.

RF handles more readability metrics than any other free tool, including some designed for technical documentation (e.g., LW). It’s the only free tool that (1) calculates both FORCAST and LW, and (2) provides detailed counts for words of up to 7 syllables.

RF includes other tools for improving writing quality, not just readability metrics. Among other things, they have a “Lexical Density / Diversity” tool that I’m going to check out.

Of possible interest to my Spanish-speaking friends: Readability Formulas offers a companion Spanish Readability site that assesses the readability of texts written in Spanish. It offers more than 10 readability metrics designed specifically for Spanish!

Second Choice?

RF looks less accurate on the Flesch metrics than I would like. It also doesn’t provide everything; for example, it doesn’t estimate reading or speaking times. A second tool might complement RF.

Looking at which tools provide the data RF doesn’t have, and my NFRs, it’s a tossup among GRAM, WFX, and DY. All include a few data measures RF doesn’t. GRAM and WFX have reading and speaking times. These times are useful; Substack doesn’t show a time estimate until after a post is published.

Grammarly showed higher variation on the only grade level it supports, FKGL, probably because it displays FKGL only to the nearest whole grade. GRAM also had the hurdle of requiring an account. Once the account was set up, the site was fairly easy to use.[2] It’s fast and handles pasted rich text well, straight from the Substack editor into Grammarly’s UI. Setting aside its use of AI, its many spelling, grammar, and style features are a nice bonus, even if I don’t rely on them. Privacy and ethics are the main concerns with GRAM. Grammarly offers assurances that user data is not sold. However, it’s almost certainly using user-submitted texts to train its AI, specifically for style.

As for the other two candidates, WFX and DY both work through a simple one-page UI. WFX provides transparency into the formulas and values used, and it mostly avoided the extreme scores that some of the other tools gave.

DY’s privacy and AI ethics are less concerning than those of WFX or GRAM. DY has some interesting tools. However, its “rare word” counts are all it adds to RF’s counts, and it doesn’t estimate reading or speaking time.

I’m not sure the ethical risks plus the small gains warrant the extra effort of making TXT input for WFX or DY and using the tools. For now, I’ll reserve them for spot checks in my monthly retrospectives. And I’ll continue to monitor GRAM’s Responsible AI policies.

5. What’s Next?

Here’s my 5-point plan for acting on these findings.

Add Readability Formulas to my “Ethical Shoestrings” page for reference (done).

Acquire & install (if appropriate) my chosen tools (done).

RF - browser only - no installation needed, just a bookmark.

Use RF during June to retroactively score and size my posts to date (partly done, more to do).

Change the RF default for compound sentences (treat as one) to match what the help text recommends (treat as two).

For readability scoring purposes, I want to try excluding URLs in LinkedIn posts and excluding footnotes in Substack. (I’ll still track footnote counts for planning and estimation purposes.)

Track my use of passive voice. RF also counts “Passive Voice Sentences” and “Active Voice Sentences” and calculates the ratio. My average for the 5 cases is 3.4%, which seems reasonable.

Work on varying my sentence lengths[3] to keep it interesting[4].

RF counts “Short Sentences”, “Medium Sentences”, and “Long Sentences”. These values aren’t in any known readability metric. I can still use them to help me track variability of my sentence lengths. For the 5 cases, my data shows averages of 24.4 short, 29.2 medium, and 20.6 long sentences. (I don’t know their definitions of short, medium, and long - will ask.)

Both Grammarly and RF provide data for ASL (average sentence length = words per sentence). For my 5 cases, the averages were 17.46 and 17.40. That’s well above the guidance that “sentences of 14 words or fewer are typically understood by 90% of readers”. I need to look for guidance that’s more relevant to technical writing, though.

Keep an eye out for other ways to improve my writing (ongoing). One possibility: checking my use of “hard words”. RF says my average for these 5 cases is 14.3%. I might want to try to reduce that. First, I’ll see what my new data says.
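Tracking ASL and sentence-length variety can be sketched from a list of per-sentence word counts. Since RF’s definitions of short, medium, and long are unknown, the thresholds below (`short_max=10`, `long_min=25`) are purely hypothetical placeholders; the standard deviation is one simple way to measure how varied the lengths are.

```python
import statistics


def sentence_length_profile(lengths: list[int], short_max: int = 10, long_min: int = 25) -> dict:
    """Summarize sentence lengths (in words per sentence).

    short_max and long_min are HYPOTHETICAL thresholds, not RF's actual definitions.
    """
    return {
        "asl": statistics.mean(lengths),  # average sentence length
        "stdev": statistics.stdev(lengths) if len(lengths) > 1 else 0.0,  # variety
        "short": sum(1 for n in lengths if n <= short_max),
        "medium": sum(1 for n in lengths if short_max < n < long_min),
        "long": sum(1 for n in lengths if n >= long_min),
    }
```

For hypothetical sentence lengths of 5, 12, 30, and 15 words, the ASL is 15.5, with 1 short, 2 medium, and 1 long sentence under these placeholder thresholds.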

In my next agileTeams retrospective (early July), I’ll share how I’ve handled rich text and URLs, what I’ve learned, and how these two tools have worked for me so far. I’m curious to see if the new tools and scores show different shapes or trends on my readability graphs vs. the Word scores. See you there 🙂

What do you think? Do you have any experiences or recommendations on preferred tools or tactics for improving readability of Substack posts?

6. Appendix

7. References

Related Substack Content

End Notes

[1] “Gobbledygook has got to go”, John O’Hayre, 1966.

[2] I tried Grammarly’s Edge extension and Windows integration. They were convenient, but neither widget has Readability scoring - only spelling, grammar, and style checking. And the persistent popups prompting me to try their AI features (and upgrade to Premium) quickly turned me off. So I uninstalled the Windows tools and only used their web page during this evaluation.

[3] “LawProse Lesson #269: Average sentence length”, by Jason Warren on lawprose.org, 2016-12-14.

[4] “Why you should vary your sentence lengths - Writing Techniques” (prowritingaid.com).

![Using MS Word for Readability Analysis [Bonus page]](https://substackcdn.com/image/fetch/$s_!4GFP!,w_140,h_140,c_fill,f_auto,q_auto:good,fl_progressive:steep,g_auto/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F592d16c2-c2e9-4e79-a821-8e1f24d2a895_1165x599.png)

I discovered from @katiebeanwellness that the Substack post draft window has icons at lower right that will let us see the word count and estimated reading & speaking times! That takes away one of my motivations to use a second tool such as Grammarly, in addition to Readability Formulas. Thank you, Katie 😊